China's Mixed Stacks Are the New Normal

What happened and when

DeepSeek’s R2 is delayed. In August 2025, the Financial Times reported that Chinese authorities had encouraged the company to prioritize Huawei’s Ascend processors over Nvidia’s for training. After repeated instability and efficiency problems on Ascend, and despite assistance from Huawei engineers, DeepSeek reverted to Nvidia for training.

Here is a quick timeline for context:

September 2022: The U.S. requires licenses for exports of Nvidia’s A100 and H100 to China.

October 2023: The U.S. expands export controls, blocking Nvidia’s A800 and H800, chips designed for the Chinese market to comply with the prior limits.

January 20, 2025: DeepSeek releases R1 under an MIT license; the technical report appears on arXiv the same week.

April 2025: The U.S. Commerce Department imposes export license requirements on Nvidia’s H20 and AMD’s MI308, and any comparable parts, so shipments to China need case-by-case U.S. approval.

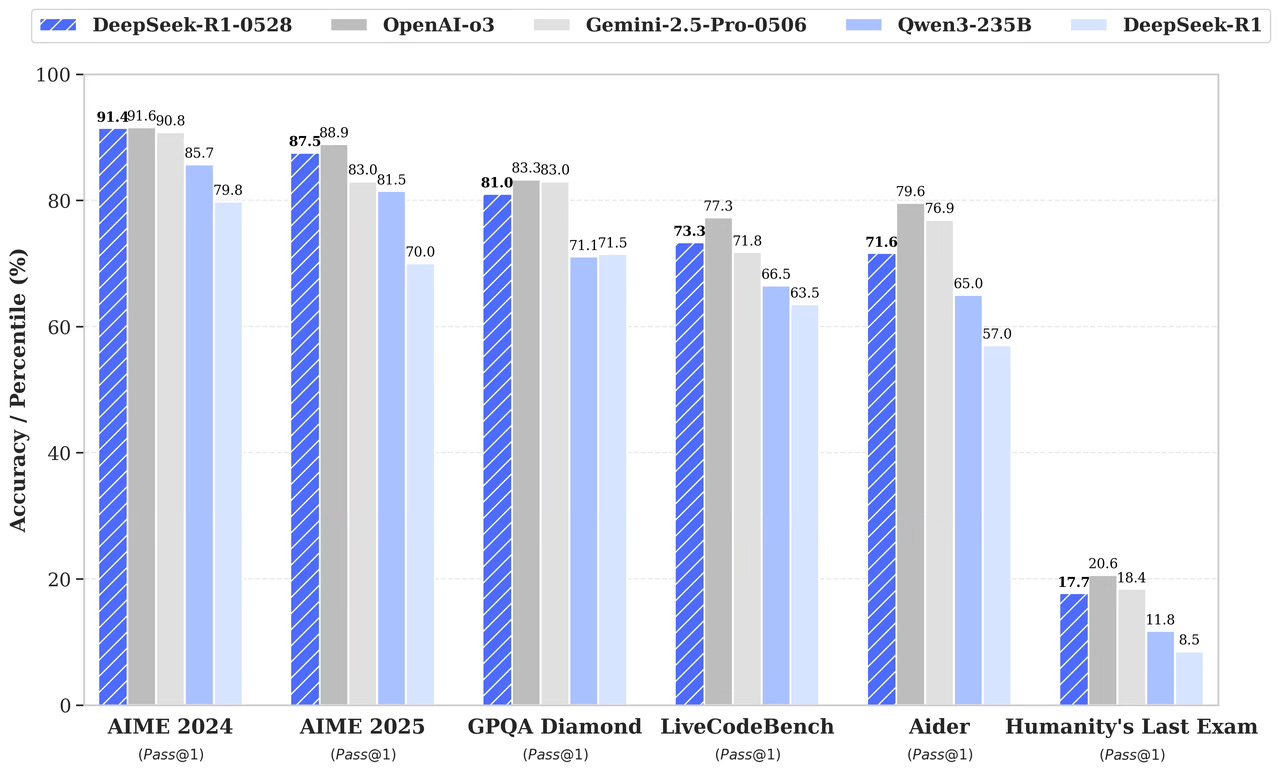

May 28, 2025: DeepSeek ships the R1-0528 update with improved benchmarks.

By late June 2025: Reporting indicates CEO Liang Wenfeng is not satisfied with R2’s performance.

August 2025: The administration signals a narrow reopening under unusual, closely monitored terms. In the same period, China’s regulators push in the opposite direction, urging firms to avoid H20 in sensitive contexts and to justify purchases.

Also in 2025: Competition in China accelerates. Moonshot’s Kimi K2 and Zhipu’s GLM-4.5 ship open weights with strong claims on coding and agentic workloads. Alibaba rolls out Qwen 3 updates, including Qwen3-Coder.

Why training is harder than inference

Training frontier LLMs is a full-stack problem across hardware, systems, and tooling. Production training stacks lean heavily on mature Nvidia components (CUDA, cuBLAS, cuDNN, NCCL, and the profilers), so migrating one to a different accelerator isn’t plug-and-play.

Three friction points in particular come to mind:

Kernel & framework maturity. CANN/MindSpore and PyTorch-Ascend have moved fast, but long runs still hit kernel and toolchain edge cases, which are costly at large batches and long context.

Attention implementations. FlashAttention-style kernels and mixed precision need retuned tiling, numerics, and scheduling for Ascend’s vector units, memory hierarchy, and DMA engines.

Tooling & graph modes. MindSpore’s static graphs can be quick, but the compile-debug loop differs from PyTorch’s dynamic style. Add MoE/custom ops, and both compile-time and runtime friction go up.
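To make the attention friction point concrete, here is a minimal pure-Python sketch of the tiled, running-softmax idea behind FlashAttention-style kernels. It is an illustration only, not Ascend or CUDA code: the function names, the single-query simplification, and the `tile` parameter are assumptions made for this example, and the usual 1/sqrt(d) score scaling is omitted. The point is that the tile size and the rescaling step are exactly the parts that have to be retuned for each chip's memory hierarchy and numerics.

```python
import math

def naive_attention(q, keys, values):
    """Reference version: all scores are held in memory at once."""
    scores = [sum(a * b for a, b in zip(q, k)) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total
            for d in range(dim)]

def tiled_attention(q, keys, values, tile=2):
    """Same result, but keys/values are streamed in tiles.

    Only a running max, a running sum, and an accumulator are kept,
    which is what lets a real kernel stay inside fast on-chip memory.
    """
    dim = len(values[0])
    running_max = float("-inf")
    running_sum = 0.0
    acc = [0.0] * dim
    for start in range(0, len(keys), tile):
        k_tile = keys[start:start + tile]
        v_tile = values[start:start + tile]
        scores = [sum(a * b for a, b in zip(q, k)) for k in k_tile]
        new_max = max(running_max, max(scores))
        # Rescale everything accumulated under the previous max.
        scale = math.exp(running_max - new_max)
        running_sum *= scale
        acc = [a * scale for a in acc]
        for s, v in zip(scores, v_tile):
            w = math.exp(s - new_max)
            running_sum += w
            for d in range(dim):
                acc[d] += w * v[d]
        running_max = new_max
    return [a / running_sum for a in acc]

# Hypothetical toy inputs, chosen only to exercise both code paths.
q = [1.0, 0.5]
keys = [[0.2, 0.1], [1.5, -0.3], [0.0, 2.0], [-1.0, 0.4], [0.7, 0.7]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [2.0, -1.0], [0.3, 0.9]]
full = naive_attention(q, keys, values)
tiled = tiled_attention(q, keys, values, tile=2)
```

The two functions agree to floating-point tolerance for any tile size, but in reduced precision the rescaling step is where drift creeps in, which is one reason a port needs fresh numerical validation rather than a recompile.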

By contrast, inference ports are more tractable. Community engines and vendor stacks (e.g., vLLM serving on Ascend) are maturing fast. A serving migration can be completed in weeks, whereas a training run lasts months and leaves little room for failures.
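A rough back-of-the-envelope sketch shows why failure budgets bite harder in training. It assumes a deliberately simple model: failures arrive at a constant average rate, each one loses half a checkpoint interval of work on average plus a fixed restart time. The function name and every number below are hypothetical, chosen for illustration rather than drawn from any reported run.

```python
def expected_lost_hours(run_hours, mtbf_hours,
                        checkpoint_interval_hours, restart_hours):
    """Estimate wall-clock hours lost to failures over a training run.

    Assumes failures land uniformly between checkpoints, so each one
    costs half an interval of recomputation plus the restart time.
    """
    expected_failures = run_hours / mtbf_hours
    lost_per_failure = checkpoint_interval_hours / 2 + restart_hours
    return expected_failures * lost_per_failure

# Hypothetical 60-day run, 2-hour checkpoints, 1-hour restarts.
stable_stack = expected_lost_hours(1440, 48, 2, 1)    # one failure every 2 days
unstable_stack = expected_lost_hours(1440, 12, 2, 1)  # one failure every 12 hours
print(stable_stack, unstable_stack)  # 60.0 240.0
```

Under these assumed numbers, a fourfold drop in mean time between failures turns 2.5 lost days into 10. A serving fleet that hits the same fault simply restarts a replica.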

The mixed stack is pragmatic, not ideological. Train on Nvidia when you can, and serve on Ascend when you must.

Washington’s narrow reopening and Beijing’s wider closing

The U.S. posture since April 2025 has been to require licenses for shipments of Nvidia’s H20 and AMD’s MI308 and, by August, to begin issuing some of those licenses while signaling openness to tightly controlled, scaled-down next-gen parts. Beijing’s posture since August 2025 has been to discourage H20 purchases in government-adjacent and sensitive sectors, then to tighten enforcement at the platform and regulatory level.

A practical implication: even when the licensing window opens, compliance costs, volume caps, and political signals can keep shipments low. Any reopening is partial and contingent by design.

China’s chip progress: real, uneven, and non-linear

It’s easy to caricature Huawei’s Ascend line as “not H100.” That misses the point. Independent analyses and vendor disclosures point to steady gains in China’s domestic accelerators with ambitions to scale output, but progress remains constrained by yields and tight HBM supply. Ascend hardware shows compelling raw throughput. The deficit is in software/tooling and predictable stability over months-long training. But that gap is slowly shrinking.

On systems, Huawei is pushing large multi-node “supernode” interconnects and richer graph-level control. If those systems deliver stable, near-linear scaling for LLM training, the training picture could look very different on a 12-to-24-month horizon. You don’t need to beat Nvidia outright to reduce functional dependence on U.S./Taiwan compute. You need “good enough” plus software maturity.
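One common way to quantify how close a cluster comes to linear scaling is efficiency: measured cluster throughput divided by single-node throughput times node count. The sketch below assumes that definition; the function name and the throughput figures are hypothetical and do not describe any real Ascend or Nvidia system.

```python
def scaling_efficiency(single_node_throughput, cluster_throughput, num_nodes):
    """Fraction of ideal linear scaling actually achieved (1.0 = perfect)."""
    ideal = single_node_throughput * num_nodes
    return cluster_throughput / ideal

# Hypothetical: 1,000 tokens/s on one node, 54,400 tokens/s on 64 nodes.
eff = scaling_efficiency(1000, 54400, 64)
print(round(eff, 2))  # 0.85
```

In this framing, “good enough” means holding efficiency steady as node counts grow over a months-long run, not winning on single-chip peak throughput.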

The chokepoint shifts and why Taiwan risk rises for the U.S.

Nvidia’s most advanced datacenter GPUs are fabricated and packaged by TSMC in Taiwan. Even as Nvidia migrates packaging technologies, the near-term center of gravity for advanced AI chipmaking remains on the island. U.S. fabs are coming online, but not fast enough to change the next few cycles.

Meanwhile, China is reducing its functional dependence by forcing workloads onto domestic accelerators, especially for inference, and eventually for training as toolchains mature. The chokepoint that used to bind both sides now binds one side more than the other. That asymmetry is why any disruption in Taiwan is now more consequential for U.S. AI than for China’s, compared with 2023.

DeepSeek’s position: engineering debt, competitive pressure, and cost narratives

The DeepSeek moment rewired expectations by claiming near-frontier reasoning performance at a radically lower training cost. Whether or not you accept the exact cost figure, the company placed a target on its own back: repeat the feat under tighter controls, with rivals shipping fast, and with its training stack changing underneath it.

On the engineering side, the Ascend detour likely consumed months of senior time: stabilizing kernels, debugging collectives, retuning attention kernels for NPU memory hierarchies, wrestling with static-graph compilation, and retraining staff. On the market side, mindshare is path-dependent. Whoever ships runnable checkpoints with a simple inference path, clear docs, and responsive maintainers wins the next round of downstream innovation. In 2025, that’s the currency.

What the hybrid pattern signals

With the mixed stack, Chinese labs can keep shipping in an environment where (1) export licenses make Nvidia availability uncertain, (2) domestic policy rewards workloads on Chinese accelerators, and (3) time-to-market penalizes ideological purity.

Expect more mixed stacks: CUDA for the heavy training runs when available, Ascend for inference at scale, especially in regulated or state-linked contexts. The shift will feel incremental until it suddenly doesn’t, because the ecosystem’s defaults will have moved.

What the U.S. reopening actually changes

The H20 licensing window and any scaled-down next-gen parts matter commercially for:

Nvidia’s China revenue mix

Availability for multinationals operating in China

Competitive pressure on domestic accelerators

Strategically, Beijing’s counter-policy, discouraging and then disallowing big-tech purchases, blunts the effect. Over time, the lesson for Chinese buyers is to avoid dependency on policy changes and to bet on domestic roadmaps.

There is also a supply-chain irony: chipmakers may over-order in anticipation of reopening, only to find demand throttled by domestic policy on the buyer side. That whiplash accelerates bifurcation.

The Taiwan situation

One uncomfortable conclusion underlies all of this. The U.S. AI stack, meaning public clouds, hyperscale labs, and most enterprise deployments, remains bound to TSMC for advanced GPUs and packaging. That doesn’t change in the next 6 to 12 months. China, meanwhile, is reducing its functional dependence by pushing inference and, progressively, training onto domestic accelerators, even if peak performance trails.

China’s chip progress is making Beijing less vulnerable to a Taiwan shock at the exact moment America’s AI stack remains tied to Taiwan’s fabs. That asymmetry raises the strategic price of any disruption in Taiwan for the United States, even if China’s chips still lag peak Nvidia performance.

What matters most from here

First, the rate of technical progress. Huawei’s toolchains and research output are improving quickly. If Ascend-native attention, stable multi-month runs, and a friendlier developer experience converge, the hybrid pattern will tilt further toward “do more domestically.”

Second, policy clarity. If the U.S. channel stays narrow and China’s Nvidia posture remains tight, developer defaults in China will normalize to Ascend engines. That’s how ecosystems lock in: through defaults, not regulations.

Third, Taiwan exposure. Unless there’s a major shift in U.S. domestic packaging/foundry capacity for AI accelerators, the American AI economy remains acutely exposed to Taiwan. As China lowers its exposure by moving more of the workload home, the strategic imbalance grows.